Education in the Knowledge Society 23 (2022)

Developing robust state-of-the-art reports: Systematic Literature Reviews

Desarrollo de estados de la cuestión robustos: Revisiones Sistemáticas de Literatura

Francisco José García-Peñalvo

Grupo de Investigación GRIAL, Departamento de Informática y Automática, Instituto Universitario de Ciencias de la Educación, Universidad de Salamanca, España

http://orcid.org/0000-0001-9987-5584

fgarcia@usal.es

ABSTRACT

A systematic literature review is a systematic method for identifying, evaluating, and interpreting the work of scholars and practitioners in a chosen field. Its purpose is to identify gaps in knowledge and research needs in a particular field. Systematic reviews form a broad family of methods and approaches, and the enormous volume of potentially accessible scientific output in digital format makes them necessary. However, it is not enough to label a review as systematic. This article presents the different phases to be carried out when conducting a systematic review. It begins by introducing the methodological frameworks for conducting systematic reviews and then goes into the phases of planning, conducting, and reporting the systematic review. The goal is that any article bearing this label (systematic literature review), in addition to complying with methodological and transparency principles, allows any researcher not only to trust the conclusions derived from the work but also to evolve the systematic review to tackle the obsolescence caused by the continuous advance of scientific knowledge, in line with the FAIR data model, i.e., the principles of findability, accessibility, interoperability, and reusability. This article has been written in English and Spanish.

Keywords

Systematic literature review; Types of systematic literature review; SALSA; PRISMA 2020; PICOC

RESUMEN

La revisión sistemática de literatura es un método sistemático para identificar, evaluar e interpretar el trabajo de académicos y profesionales en un campo elegido. Su propósito es identificar lagunas en el conocimiento y necesidades de investigación en un campo concreto. Las revisiones sistemáticas conforman una familia amplia de métodos y aproximaciones y resultan totalmente necesarias por el volumen tan enorme de producción científica en formato digital al que se tiene potencialmente acceso. Sin embargo, no es suficiente con adjetivar una revisión como sistemática. El objetivo de este artículo es presentar las diferentes fases que se deben llevar a cabo cuando se realiza una revisión sistemática. Se comienza con la introducción de los marcos metodológicos de referencia para la realización de revisiones sistemáticas, para, a continuación, profundizar en las fases de planificación, realización e informe de la revisión sistemática. De modo que todo artículo que lleve este marbete (revisión sistemática de literatura) además de cumplir unos principios metodológicos y de transparencia, permita que cualquier investigador pueda no solo confiar en las conclusiones derivadas del trabajo, sino evolucionar la revisión sistemática realizada para atacar el problema derivado de la obsolescencia y el continuo avance del conocimiento científico, en consonancia con el modelo de datos FAIR, es decir, que se cumplen con los principios de encontrabilidad, accesibilidad, interoperabilidad y reutilización. Este artículo se ha escrito en español y en inglés.

Palabras clave

Revisión sistemática de literatura; Tipos de revisión sistemática de literatura; SALSA; PRISMA 2020; PICOC

1. Introduction

Contributions to the state of the art underpin advances in scientific knowledge. Therefore, knowledge of related work through a review of the existing literature is essential for good research. If the literature review is deficient, the rest of the research will be compromised, as a research team cannot conduct meaningful academic work without first knowing the literature in the field of study (Boote & Beile, 2005).

Moving from research in general to the particular case of a scientific publication, a poor literature review, one that does not adequately support the background of the work and therefore cannot demonstrate its scientific contributions, is one of the causes that can lead to the rejection of the publication (Randolph, 2009).

The state of the art is one of the classic chapters in any academic work, for example, in PhD theses, where the literature review must be innovative and reflective and must demonstrate the author's personal growth as a scientist (Daigneault et al., 2014; McGhee et al., 2007). In addition, the presentation of the state of the art is essential to support the merits of new research project applications. In the case of scientific articles, the selected bibliographic sources contextualise the work and contrast its contributions with other related research.

However, the great effort involved in producing a good state of the art, together with the rise of evidence-based research, so widely used in the bio-health field, has led to review articles becoming very popular and widely accepted in academia.

The review article aims to identify what is known but, above all, what is unknown about the field under investigation, responding to a set of research questions that have been established promptly. Therefore, a review article is considered a detailed, selective and critical study that integrates essential information from a unitary and comprehensive perspective (Guirao-Goris et al., 2008).

The review can be recognised as a research study in itself, in which the author has a set of questions, which, together with the objective of the review, the intended outcomes and the intended audience, determines how data are identified, collected and presented (Booth et al., 2016). Data, in this case, are the primary sources, already published works, which will be analysed and from which the conclusions of the review work will be drawn. Thus, the fundamental difference between a literature review and an original paper or primary study is the unit of analysis, not the scientific principles applied (Gastel & Day, 2016).

Any research process must follow a method that systematises the work done, making it reproducible and reliable. Literature reviews are no exception. Therefore, for a review to be considered scientific research, it must be systematic, i.e., it must summarise and analyse the evidence in a structured, explicit, and systematic way concerning the research questions posed. This implies that the method used to find, select, analyse, and synthesise the primary sources must be precisely defined and documented.

1.1. Literature review types

First, a distinction must be made between systematic and non-systematic literature reviews. The latter are often referred to as narrative reviews (Greenhalgh, 2019), but are also known as traditional or conventional reviews.

Narrative reviews study a topic comprehensively, including various aspects. The topic is presented in a narrative format, without justifying the methods used to obtain and select the information presented (Soto & Rada, 2003). Consequently, a narrative review is likely to be poorly conducted, poorly communicated, or both (Shea et al., 2002).

Booth et al. (2016) consider that, for a review to be considered systematic, it must be clear and internally valid, as well as auditable. Clarity implies a structure that is easy to navigate and interpret and a methodology that is easy to judge. Internal validity must protect the review work against bias in selecting primary papers, with relevance and rigour taking precedence. Finally, the review must be auditable for three reasons: to make the review process transparent, to ensure that the conclusions are based on the primary data and that arguments have not been fabricated to support a hypothesis formulated prior to the review process, and to allow other researchers to reproduce the review process.

These premises are congruent with the characteristics that Codina (2017) establishes to consider a review as systematic: systematic (hence its name, to avoid bias and subjectivity), complete (information systems have been used that are presumed to facilitate virtual access to the entire quality production of a discipline), explicit (both the sources used and the search and selection and exclusion criteria are made known) and reproducible (other researchers are allowed to check the work, follow the steps and contrast the results obtained to determine their accuracy or degree of correctness).

To summarise the differences between a systematic review and a non-systematic review, the terms explicit, transparent, methodical, objective, standardised, structured, reproducible, creative, understandable, publishable, stimulating and well written can be associated with a properly conducted systematic review. In contrast, the terms implicit, opaque, capricious, subjective, variable, chaotic and idiosyncratic can be associated with a non-systematic review.

Focusing on systematic reviews, there are various approaches ranging from hypothesis testing to interpretative techniques. Generally speaking, they can be classified as qualitative, when the evidence is presented descriptively without statistical analysis, and quantitative or meta-analyses, when they combine the results quantitatively using statistical techniques (Letelier et al., 2005).

There are, therefore, different types of literature reviews, ranging from those characterised by a more general approach to find the most notable studies in a field, but with little emphasis on quality assessment – scoping review – to those that follow a detailed protocol, in which quality control is very present and conclude with a highly complex synthesis and analysis – gold standard systematic review –. Between these extremes, there are many variants with differences in the stages of search, appraisal, synthesis, and analysis of primary sources.

With particular attention to the synthesis of primary sources, a distinction is made between aggregative and interpretative/configurative reviews (Gough et al., 2012). Reviews that collect empirical data to describe and test predefined concepts present an aggregative logic because both the primary sources and the review aggregate empirical observations and make empirically contrasted claims about a set of predefined conceptual positions. Moreover, aggregative reviews tend to combine similar forms of data so that homogeneity of studies is of interest. On the other hand, reviews that seek to interpret and understand the world organise or shape the information and develop the concepts, being more exploratory. Even if the basic methodology is determined in advance, the specific methods are adapted or selected iteratively as the research progresses. In contrast to aggregative reviews, interpretative reviews are more oriented towards discovering patterns derived from heterogeneity.

The distinction between aggregative and interpretative reviews is based on the fact that quantitative and qualitative reviews used to involve separate tasks. However, the mixed-methods review seeks to capitalise on combining concepts and patterns with the power of numbers (Pluye & Hong, 2014). Therefore, the term integrative review is used for cases where both types of data are brought together (Whittemore & Knafl, 2005), with the idea of producing a whole that is greater than the sum of its parts.

There have been several attempts to taxonomize literature reviews. Booth et al. (2016) identify 14 types of reviews, Paré et al. (2015) present 9 types of reviews organised along 4 dimensions (summary of prior knowledge, data aggregation, explanation building, critical assessment of extant literature), Whittemore et al. (2014) include 8 types of reviews (9 if two types of meta-analysis are differentiated – meta-analysis of randomised controlled trials and meta-analysis of observational studies) and García-Holgado et al. (2020) add the systematic review of research projects. Table 1 summarises the main types of literature reviews according to Booth et al. (2016), Paré et al. (2015) and Whittemore et al. (2014). A brief description of each of the types identified in the table follows.

Table 1. Literature review types. Source: Own elaboration based on Booth et al. (2016), Paré et al. (2015) and Whittemore et al. (2014)

| Overarching goal | Literature review type | Booth et al. (2016) | Paré et al. (2015) | Whittemore et al. (2014) |
|---|---|---|---|---|
| Summarization of prior knowledge | Literature review / Narrative review | X | X | |
| | Mapping review / Systematic map | X | | |
| | Overview | X | | |
| | Rapid review | X | | |
| | Scoping review | X | X | X |
| | State-of-the-art review | X | | |
| | Descriptive review | | X | |
| Data aggregation or integration | Integrative review | X | | X |
| | Meta-analysis | X | X | X |
| | Mixed studies review / Mixed methods review | X | | X |
| | Qualitative systematic review / Qualitative evidence synthesis | X | X | X |
| | Umbrella review | X | X | X |
| | RE-AIM review | | | X |
| Explanation building | Realist review | X | X | |
| | Theoretical review | | X | |
| Critical assessment of extant literature | Systematic review / Systematic search and review | X | | X |
| | Critical review | X | X | |

A literature review or narrative literature review (Green et al., 2006) is a generic term for reviewing recent or current literature, which can cover a wide range of topics with varying comprehensiveness and breadth. It often uses a selective or opportunity-based search strategy without explicit criteria for selection and quality assurance of primary sources. A narrative approach is used to synthesise and analyse the results. Examples of this type of review could be (García-Peñalvo & Seoane-Pardo, 2015; Ren et al., 2021).

The mapping review or systematic map (Grant & Booth, 2009) traces out and categorises the existing literature to commission new reviews and/or primary research, identifying gaps in the research literature. It answers broadly scoped questions to obtain a representative sample of primary sources in the research field. The selection of sources is made according to explicit criteria, and there is usually no assessment of the quality of the selected sources. Synthesis is usually based on graphs and tables, while analysis can be creative, focusing on critical data analysis, making comparisons, or identifying patterns or essential themes. Although literature mapping reviews are a specific type of review, the mapping approach can also be used to present the characteristics of the dataset that results from any other type of systematic literature review. Examples of such a review could be (Conde et al., 2021; Rincón-Flores et al., 2019).

The overview review (Oxman et al., 1994) is a generic term that deals with the summary of selected literature to survey it and describe its characteristics. It may or may not incorporate systematic aspects of the search and synthesis. The analysis may be presented in a chronological, conceptual, thematic, etc. format. Examples of such a review could be (Boulos et al., 2007; King et al., 2019).

Rapid review (Butler et al., 2005) is used to assess what is already known about a policy or practice issue, using systematic review methods to search and critically evaluate existing research. Examples of such a review might be (Bryant & Gray, 2006; Cardwell et al., 2022).

The scoping review (Daudt et al., 2013) is a preliminary assessment of the size and potential scope of the available research literature. It aims to identify the nature and scope of the research evidence (usually including ongoing research). It has a broad focus but with a comprehensive objective. Explicit selection criteria are applied, but quality criteria are not essential. Content analysis techniques are often used. Examples of such a review could be (Archer et al., 2011; Marcos-Pablos & García-Peñalvo, 2022; Veteska et al., 2022).

The state-of-the-art review (Grant & Booth, 2009) attempts to address more current issues in contrast and combination with other retrospective approaches. It may offer new perspectives on the topic or point to an area for further research. It applies a comprehensive search of the literature without evaluating the sources obtained, often combining narrative and tabular techniques to present the current state of knowledge and trends and limitations of the research field. An example of such a review could be (Gyongyosi & Imre, 2019).

Descriptive reviews (King & He, 2005) seek to determine the extent to which a set of empirical studies in a specific research area reveals interpretable patterns or trends concerning pre-existing propositions, theories, methodologies, or findings. It usually employs structured search methods to form a representative sample from a broader group of work related to the research area. Selection criteria are used, but not quality assessment criteria. An example of this type of review could be (Palvia et al., 2004).

The integrative review (Whittemore & Knafl, 2005) includes both experimental and non-experimental research to gain a deeper understanding of a phenomenon of interest. Integrative reviews combine theoretical and empirical literature data, with various search strategies being common to reach both types of sources. Primary works can be coded according to their quality but are not necessarily excluded. The analysis combines creative aspects with critical analysis of the data. An example of such a review could be (Stamp et al., 2014).

Meta-analysis (Higgins et al., 2021) statistically combines the results of quantitative studies to provide a more precise estimate of the effect. It tends to pursue one of these objectives primarily: (1) assess the consistency/variability of results across the primary studies included in the review (i.e., heterogeneity across studies); (2) investigate and explain (if feasible) the causes of any heterogeneity (e.g., through subgrouping or meta-regression analysis) to improve scientific understanding; (3) calculate a summary effect size together with a confidence interval; and (4) assess the robustness of the cumulative effect size through sensitivity analysis and formal assessments of potential sources of bias, including publication bias, which arises from the primary studies and could have an impact on the calculated summary effect. Examples of such a review could be (Das et al., 2022; Sung et al., 2016).
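Objectives (1) and (3) above can be illustrated with a minimal sketch. It assumes a fixed-effect, inverse-variance model, which is only one of several possible pooling models and is not prescribed by the sources cited here. The function name `fixed_effect_meta` and the input values are hypothetical and serve only as an illustration; the effect sizes are invented, not taken from any study.

```python
import math


def fixed_effect_meta(effects, variances):
    """Pool study effects with inverse-variance weights (fixed-effect model).

    Returns the summary effect, its standard error, an approximate 95%
    confidence interval, Cochran's Q and the I^2 heterogeneity statistic.
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching variances")

    # Each study is weighted by the inverse of its sampling variance.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    se = math.sqrt(1.0 / total_weight)
    ci95 = (pooled - 1.96 * se, pooled + 1.96 * se)

    # Cochran's Q: weighted squared deviations from the pooled effect.
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1

    # I^2: share of total variability attributed to heterogeneity, in percent.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0

    return {"pooled": pooled, "se": se, "ci95": ci95, "Q": q, "df": df, "I2": i2}


# Invented effect sizes and variances for three hypothetical primary studies.
summary = fixed_effect_meta([0.30, 0.45, 0.10], [0.02, 0.03, 0.01])
print(summary)
```

When I² is high, a random-effects model or the subgroup analyses mentioned in objective (2) would usually be more appropriate than this fixed-effect sketch.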

The mixed studies review or mixed methods review (Pluye et al., 2009) simultaneously examines qualitative, quantitative or mixed primary studies. For Whittemore et al. (2014), this review could be equivalent to an integrative review. An example of this type of review could be (Buck et al., 2015).

Qualitative systematic review or qualitative evidence synthesis (Candy et al., 2011) integrates or compares the results of qualitative studies. An example of such a review could be (Yu et al., 2008).

The umbrella review (Smith et al., 2011), also called a summary of reviews, is described as a tertiary study integrating evidence from different systematic reviews (qualitative or quantitative) to answer a narrow set of research questions. It has a set of criteria for selecting secondary sources and assessing their quality. An example of this type of review could be (García-Holgado & García-Peñalvo, 2018).

The RE-AIM review (Glasgow et al., 1999) aims to assess and synthesise the scope, effectiveness, adoption, implementation, and maintenance of interventions. An example of such a review could be (Blackman et al., 2013).

Realist reviews (Greenhalgh et al., 2011) (also called meta-narrative reviews or qualitative evidence synthesis reviews) are theory-driven interpretive reviews that are developed to alternatively inform, enhance, extend, or complement conventional systematic reviews, making sense of heterogeneous evidence on complex interventions applied in diverse contexts in ways that inform policy decision-making. An example of such reviews might be (Wong et al., 2010).

The theoretical review (Webster & Watson, 2002) builds on existing conceptual and empirical studies to provide a context for identifying, describing and transforming the theoretical structure and the various concepts, constructs or relationships into a higher order. Its main objective is to develop a conceptual framework or model with a set of research propositions or hypotheses. It does not necessarily incorporate criteria for the quality assessment of primary sources. An example of such a review could be (DeLone & McLean, 1992).

The systematic review or systematic search and review (Kitchenham & Charters, 2007) combines the strengths of the critical review with the comprehensive search process. It addresses broad questions to produce a synthesis of the best evidence. Examples of such reviews could be (Fornons & Palau, 2021; Vázquez-Ingelmo et al., 2019).

The critical review (Dixon-Woods et al., 2006) critically analyses the existing literature on a broad topic to reveal weaknesses, contradictions, controversies, and inconsistencies. Unlike a review that attempts to integrate existing works, a review that involves critical evaluation does not necessarily compare primary sources with each other. Instead, it compares each work against a criterion and considers it more or less acceptable. Critical reviews are selective or representative, rarely involving an exhaustive search of all relevant literature. Such reviews may explain how the review process was conducted, but they rarely assess the quality of the selected studies, especially when it comes to qualitative research. Examples of such a review could be (Balijepally et al., 2011; Bolinger et al., 2021).

1.2. Objective and organisation of the article

Systematic approaches refer to the elements of a literature review that, either individually or collectively, contribute to making the methods explicit and reproducible. Systematic approaches are evident in both the conduct and presentation of the literature review and are embodied in the formal systematic review method. Systematic approaches include (Booth et al., 2016):

•Systematic approaches to literature searching.

•Systematic approaches to quality assessment.

•Systematic approaches to literature synthesis.

•Systematic approaches for analysing the robustness and validity of review findings.

•Systematic approaches to presenting review results using narrative, tabular, numerical, and graphical approaches.

Therefore, in general terms, a systematic literature review can be defined as a systematic method for identifying, evaluating, and interpreting the work of researchers, academics, and practitioners in a chosen field (Fink, 1998).

This article aims to present the different phases to be carried out when conducting a systematic review. It begins by introducing the methodological frameworks for conducting systematic reviews and then moves on to the phases of planning, conducting, and reporting the systematic review.

2. Methodological frameworks for systematic reviews

A systematic review requires the prior definition of a review protocol, which must be followed and applied during the review phases. The protocol must be documented and published independently of the review (Torres-Torres et al., 2021) or as an integral part of it (Cruz-Benito et al., 2019).

Many methodological frameworks serve as a reference for determining search protocols in different types of systematic reviews, such as the Cochrane guide (Higgins et al., 2021), SALSA (Grant & Booth, 2009), PRISMA (Liberati et al., 2009; Page, McKenzie, et al., 2021) or PSALSAR (Mengist et al., 2020), among others.

The SALSA framework (Grant & Booth, 2009) takes its name from the four main steps in the review process: Search, Appraisal, Synthesis, and Analysis.

The search phase refers to how the search for the primary sources to be reviewed is carried out. The review protocol should state that the search should be conducted using reference databases such as WoS or Scopus. The search strategy should incorporate transparent and well-defined criteria for both inclusion and exclusion of the papers to be reviewed. Typically, this search strategy will be materialised with the choice of keywords, the corresponding search equations, and possibly applying filters of some kind, e.g., primary sources published in the last 5 or 10 years, in specific languages, etc. Therefore, the final objective of this phase is to obtain a bank of primary sources, made up of a variable number of records that will depend on the type of study, the objectives, and the inclusion and exclusion criteria applied.
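The idea of turning keywords into a search equation plus a date filter can be sketched as follows. This is an illustrative assumption about how such an equation might be assembled: the generic `OR`/`AND` syntax is not the exact query language of WoS or Scopus, and the function names `build_query` and `within_window`, as well as the keywords, are hypothetical.

```python
def build_query(concept_groups):
    """Build a generic boolean search equation.

    Synonyms inside a group are joined with OR; the groups, each one
    representing a concept of the research question, are joined with AND.
    """
    clauses = []
    for synonyms in concept_groups:
        quoted = ['"{}"'.format(term) for term in synonyms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


def within_window(year, last_year, window):
    """Return True if `year` falls in the last `window` years up to `last_year`."""
    return last_year - window < year <= last_year


# Hypothetical keywords for a review on dashboard variability.
groups = [
    ["dashboard", "information visualisation"],
    ["variability", "customisation"],
]
print(build_query(groups))
print(within_window(2018, last_year=2022, window=5))
```

Recording the exact equation used in each database, together with the date on which it was run, is what later allows other researchers to reproduce or update the search.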

The appraisal phase of the primary sources, obtained through the various searches, is carried out based on predefined criteria applied to each selected source to decide whether they will finally form part of the review. The criteria can be organised into two blocks that constitute a double filter. The first block comprises pragmatic criteria, such as the date of publication of the works, their geographical or thematic scope, etc. The second block includes the quality criteria of the primary sources, such as the quality of the research, the methodologies used, the results, etc. Therefore, this phase aims to exclude primary sources from the final corpus that do not meet the essential inclusion criteria and to ensure that those that form part of the final corpus are of sufficient quality and relevance.
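The double filter described above can be sketched in a few lines. The `Record` fields, the numeric `quality_score` and the threshold are hypothetical simplifications: in a real protocol the quality criteria are usually a checklist whose items and scoring are defined by the review team.

```python
from dataclasses import dataclass


@dataclass
class Record:
    title: str
    year: int
    language: str
    quality_score: float  # hypothetical score obtained from a quality checklist


def passes_pragmatic(record, min_year, languages):
    """First block: pragmatic criteria such as publication date and language."""
    return record.year >= min_year and record.language in languages


def passes_quality(record, threshold):
    """Second block: the record must reach the agreed quality threshold."""
    return record.quality_score >= threshold


def appraise(records, min_year, languages, threshold):
    """Apply the pragmatic filter first and the quality filter second."""
    after_first_block = [
        r for r in records if passes_pragmatic(r, min_year, languages)
    ]
    return [r for r in after_first_block if passes_quality(r, threshold)]


# Invented records used only to exercise the two filters.
candidates = [
    Record("Study A", 2019, "en", 7.5),
    Record("Study B", 2010, "en", 9.0),   # fails the date criterion
    Record("Study C", 2021, "es", 4.0),   # fails the quality criterion
]
final_corpus = appraise(candidates, min_year=2015, languages={"en", "es"}, threshold=6.0)
print([r.title for r in final_corpus])
```

Keeping the two blocks as separate functions makes it easy to report how many records were excluded by each one.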

The synthesis and analysis phases aim to gather and compare the results of each of the primary sources of the selected corpus after the different screening iterations by applying the inclusion/exclusion criteria and the quality assessment criteria. Specifically, the synthesis refers to the synthetic representation of each primary source, extracting its most relevant characteristics related to the research questions formulated. In the case of quantitative reviews, it will address numerical-statistical aspects through meta-analysis techniques, while qualitative reviews may use tables or sheets to synthesise their common dimensions. The analysis phase involves the description and overall assessment of the results found. To develop the state of the art, the analysis makes it possible to present a global discourse on the situation of the field of study under consideration.

The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) framework (Hutton et al., 2016; Moher et al., 2010; Page, McKenzie, et al., 2021; Shamseer et al., 2015) is one of the most widely used frameworks for systematic review articles (in August 2020, the 2009 version of PRISMA was estimated to have been cited in the Scopus database in around 60,000 articles and recommended as a reference in more than 200 scientific journals and organisations, covering all branches of knowledge). The PRISMA framework aims to help authors improve the reporting of systematic reviews and meta-analyses. It can also be helpful for the critical appraisal of published systematic reviews. The PRISMA statement consists of a 27-item checklist (https://bit.ly/34QMZnW), which is not a systematic review quality assessment tool, and a flowchart last updated in 2020 (Page, McKenzie, et al., 2021; Page, Moher, et al., 2021).

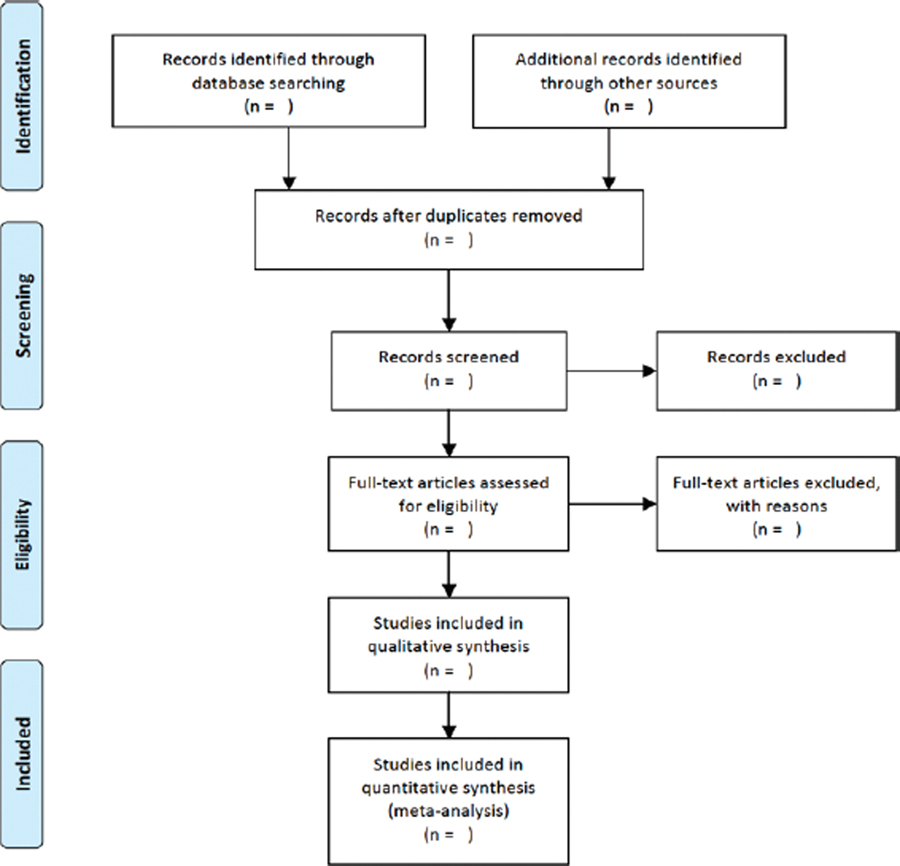

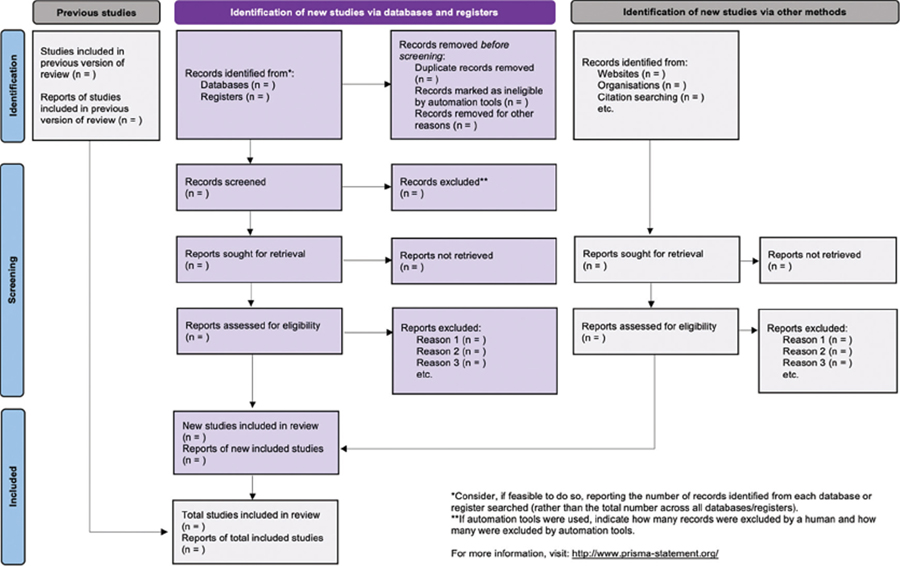

The flowchart is used in item 16 of the checklist to describe the screening from the number of records identified in the search to the number of studies finally included in the review corpus. It is essential to be clear that the PRISMA flowchart is not the process but the graphical representation of the selection phases of a systematic review.

In the 2009 version (Moher et al., 2010), the flowchart was organised in four phases: identification (to indicate the number of records found when launching the search in each database), screening (to eliminate duplicate records or records that do not meet the inclusion criteria), eligibility (to eliminate records that do not meet the established quality criteria) and inclusion (to indicate which records form the final corpus for qualitative and, if any, quantitative synthesis). This flowchart can be seen in Figure 1.

Figure 1. PRISMA 2009 Flow diagram. Source: (Moher et al., 2010)

The 2020 version of the flowchart (Page, McKenzie, et al., 2021) merges the screening and eligibility phases into a single screening phase, resulting in a three-phase flow. The design is adapted from other flowchart proposals (Boers, 2018; Mayo‐Wilson et al., 2018; Stovold et al., 2014). The 2020 version is more complete because it explicitly includes a section for sources other than databases, such as works cited in the selected primary sources. In addition, it solves a problem that was not trivial to represent in the 2009 version, namely updating a systematic review by incrementally incorporating new sources when the time window of its first version is extended. Figure 2 shows the PRISMA 2020 flowchart; boxes in grey should only be filled in if applicable and should otherwise be removed from the flowchart.

Figure 2. PRISMA 2020 Flow diagram. Source: Adapted from (Page, McKenzie, et al., 2021)
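The numbers reported in the database branch of a PRISMA 2020 flowchart must be arithmetically consistent from one box to the next. The following sketch, which is an illustration and not part of the PRISMA statement, derives each box from the previous one; the function name `prisma_counts`, the dictionary keys and the figures are hypothetical, and for simplicity it treats each report assessed as one study.

```python
def prisma_counts(identified_by_source, removed_before_screening,
                  excluded_at_screening, not_retrieved, excluded_with_reasons):
    """Derive the counts of the database branch of a PRISMA 2020 flowchart.

    `identified_by_source` maps each database to its number of records and
    `excluded_with_reasons` maps each exclusion reason to a number of reports.
    """
    identified = sum(identified_by_source.values())
    screened = identified - removed_before_screening   # e.g. duplicates removed
    sought = screened - excluded_at_screening
    assessed = sought - not_retrieved
    included = assessed - sum(excluded_with_reasons.values())

    counts = {
        "records_identified": identified,
        "records_screened": screened,
        "reports_sought": sought,
        "reports_assessed": assessed,
        "studies_included": included,
    }
    # A negative box means the reported exclusions exceed the available records.
    if min(counts.values()) < 0:
        raise ValueError("inconsistent counts: {}".format(counts))
    return counts


# Invented figures for a hypothetical review.
flow = prisma_counts(
    identified_by_source={"Scopus": 120, "WoS": 80},
    removed_before_screening=50,
    excluded_at_screening=90,
    not_retrieved=5,
    excluded_with_reasons={"low quality": 20, "out of scope": 10},
)
print(flow)
```

A check of this kind is useful when a review is updated, because the counts of the new search can be validated before they are added to the diagram.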

3. Systematic review phases

Regardless of the methodological frame of reference followed, any systematic review is conducted in three phases: planning, conducting, and reporting (Genero et al., 2014; Kitchenham & Charters, 2007), summarised in Table 2.

Table 2. Systematic review phases. Source: Adapted from (Genero et al., 2014; Kitchenham & Charters, 2007).

| Systematic review phases | Stages of each phase |
|---|---|
| Planning the systematic review | Identification of the need for a review |
| | Specifying the research questions |
| | Developing a review protocol |
| | Evaluating the review protocol |
| Conducting the review | Identification of research |
| | Selection of primary studies |
| | Study quality assessment |
| | Data extraction and monitoring |
| | Data synthesis |
| Reporting the review | Formatting the main report |
| | Evaluating the report |

3.1. Planning the systematic review

The task of this phase is to define the objectives, represented in the research questions, and the protocol of the systematic review.

3.1.1. Identification of the need for a review

Carrying out a systematic review requires people and time, so it is essential to ask whether the review is really necessary and whether the resources to carry it out are available: the people and time already mentioned, as well as access to the appropriate bibliographic databases.

Of course, there is no point in doing a systematic review that has been done before unless it is clear that existing reviews are heavily biased or outdated (Petticrew & Roberts, 2005). In this sense, it is crucial to start any new review with a search for existing systematic reviews on the topic under investigation and, if different published reviews are found, to invest the time and effort necessary to analyse whether or not the contributions of these reviews require a new systematic review process.

If, in the end, it is decided to proceed with the systematic review, it is time to define the type of review to be carried out.

3.1.2. Specifying the research questions

The purpose of a systematic review is to identify knowledge gaps and research needs in a particular field. This requires a precise specification of the problem area and a critical review of the literature within that domain to present a fine line of argument that identifies the knowledge gaps and research that needs to be addressed.

Specifying the research questions is the most important part of any systematic review. The review questions drive the entire systematic review process. Therefore, it is necessary to clearly specify the questions to be answered at the beginning of the review. A process of reflection must be carried out prior to starting the review, in which the research questions will be specified in an iterative process that will involve redefining them as many times as necessary.

Research questions that are too general and do not go deeply into the issues should be avoided. The refinement process therefore yields more specific questions that still capture the general concerns of the first formulations. The aim should be a more focused systematic review that avoids general results that would be easy to obtain with non-systematic reviews. Confusing questions tend to give confusing answers (Oxman & Guyatt, 1988).

A well-formulated research question should be meaningful to researchers and/or practitioners, lead to changes in current practice or reinforce confidence in it, or identify discrepancies between commonly held beliefs and reality (Kitchenham & Charters, 2007).

If the review is a systematic mapping, the research questions can be oriented towards compiling key concepts and themes, summarizing significant findings, presenting a directory of primary sources, authors, geographical areas where research is being carried out, years of most related scientific production, etc. If the review is of a different type, but the objective is to present the selected corpus in the form of a mapping, the mapping questions and the research questions should be distinguished by using a different code for each, for example, MQ<id> for mapping questions and RQ<id> for research questions, where <id> is a natural number, as can be seen in the example in Table 3.

Table 3. Mapping and research questions in the same review. Source: (Vázquez-Ingelmo et al., 2019).

Mapping questions

•MQ1. How many studies were published over the years?

•MQ2. Who are the most active authors in the area?

•MQ3. What type of papers are published?

•MQ4. To which contexts have been the variability processes applied? (BI, learning analytics, etc.)

•MQ5. Which are the factors that condition the dashboards’ variability process?

•MQ6. What is the target of the variability process? (visual components, KPIs, interaction, the dashboard as a whole, etc.)

•MQ7. At which development stage is the variability achieved?

•MQ8. Which methods have been used for enabling variability?

•MQ9. How many studies have tested their proposed solutions in real environments?

Research questions

•RQ1. How have existing dashboard solutions tackled the necessity of tailoring capabilities?

•RQ2. Which methods have been applied to support tailoring capabilities within the dashboards’ domain?

•RQ3. How the proposed solutions manage the dashboard’s requirements?

•RQ4. Can the proposed solutions be transferred to different domains?

•RQ5. Has any artificial intelligence approach been applied to the dashboards' tailoring processes and, if applicable, how these approaches have been involved in the dashboards' tailoring processes?

•RQ6. How mature are tailored dashboards regarding their evaluation?

It is essential to define the scope of research questions, consistent with the type of review to be conducted. Most problems in a systematic review can be attributed to a poor definition of its scope. In essence, defining the scope involves deciding who, what and how (Ibrahim, 2008). It is helpful to use a formal structure to define the questions in a much more precise way by decomposing them into their component concepts. In order to conduct this decomposition and thus define the scope, several frameworks have been defined, such as PICO (Population, Intervention, Comparison, Outcome) (Richardson et al., 1995), SPIDER (Sample, Phenomenon of Interest, Design, Evaluation, Research type) (Cooke et al., 2012), SPICE (Setting, Perspective, Intervention/Interest, Comparison, Evaluation) (Booth, 2006), CIMO (Context-Intervention-Mechanisms-Outcomes) (Denyer & Tranfield, 2009) or PICOC (Population, Intervention, Comparison, Outcome, Context) (Petticrew & Roberts, 2005).

Of these frameworks, PICOC is the most widely used. Its elements have the following meanings:

•Population: Who represents the problem or situation being addressed? For example, in a human population, what age, sex, socio-economic or ethnic groups are involved? What are the technical terms, synonyms, and related terms? Any restrictions on the population can be omitted in fields with fewer primary sources.

•Intervention or Exposure: How are you considering intervening in the situation? What kind of options do you have to address the problem? For example, it could be an educational intervention on plagiarism (the population of university students). In the case of non-intervention studies, it may be helpful to replace the intervention (a planned procedure) with an exposure (an unintended event), e.g., exposure to radiofrequency radiation from mobile phone antennas.

•Comparison: What is the alternative? This element is optional. It applies when you want to consider, for example, the effect of two or more interventions, possibly comparing their outcomes in terms of what they deliver and/or cost.

•Outcomes: How do you measure? What do you want to achieve? This element allows you to focus on the desired outcomes and assess impact: what you will measure and how.

•Context: What is the particular context of your question? Are you looking at specific countries/areas/establishments?
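The PICOC decomposition listed above can be written down as a small data structure, which makes it easy to check that no mandatory element has been left empty before the search terms are derived. The following is a minimal sketch in Python; the class name `Picoc`, the method `missing_elements`, and the sample values (in particular the context) are assumptions made for illustration, reusing the plagiarism example mentioned for the Intervention element.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class Picoc:
    """Scope of a review decomposed into PICOC elements (illustrative structure)."""
    population: List[str]
    intervention: List[str]
    outcomes: List[str]
    context: List[str]
    comparison: List[str] = field(default_factory=list)  # optional element

    def missing_elements(self) -> List[str]:
        """Return the names of mandatory elements that are still empty."""
        optional = {"comparison"}
        return [f.name for f in fields(self)
                if f.name not in optional and not getattr(self, f.name)]


# Hypothetical scope: the outcomes have not been decided yet.
scope = Picoc(
    population=["university students"],
    intervention=["educational intervention on plagiarism"],
    outcomes=[],
    context=["higher education"],  # assumed value, not taken from the article
)
print(scope.missing_elements())  # ['outcomes']
```

Keeping each element as a list of terms leaves room for the synonyms and related terms that are later combined in the search equation.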

3.1.3. Developing a review protocol

The rigour and reliability of systematic reviews rely, in large part, on pre-planning and documenting a methodical approach to their conduct, i.e., a protocol.

A systematic review protocol is essential because 1) it allows careful planning and thus anticipation of potential problems; 2) it allows detailed documentation of what has been planned before the review begins, allowing others to compare the protocol and the completed review (i.e., identify selective reporting), replicate review methods if desired, and judge the validity of the planned methods; 3) it avoids arbitrary decisions regarding inclusion criteria and data extraction; and 4) it can reduce duplication of effort and improve collaboration (Shamseer et al., 2015).

Shamseer et al. (2015) define the systematic review protocol as the explicit scientific roadmap of a planned, non-initiated systematic review, detailing the review's rationale and planned methodological and analytical approach.

A protocol, in general terms, includes 1) the final version of the research questions and their scope; 2) the inclusion, exclusion and quality criteria; and 3) the search strategy. The main elements to establish in the definition of the protocol are the following:

•Research questions (including the final version).

•Scope of the review.

•Time frame.

•Inclusion and exclusion criteria.

•Quality criteria.

•Data sources.

•Search terms.

•Canonical search equation.

Variations of these essential elements can be used, but whatever protocol is used needs to be carefully documented to be transparent and allow other researchers to follow the same procedures and obtain similar (compatible) results to those presented in the systematic review.

The research questions and their scope have already been defined in the previous step but should be included in the protocol documentation.

The time frame of the review must be adjusted to meet the objective sought, but with a view to the efficiency of the process. It is defined explicitly, posed as an exclusion criterion and, if the search interface allows it, implemented as a constraint on the selected databases.

It is essential to define both inclusion and exclusion criteria for the selected primary sources, even though one is often simply the negation of the other. In this way, a rejection criterion can be assigned to each discarded primary source in the dataset being handled. For ease of management, a unique identifier is usually assigned to each criterion, in the form IC<id> for inclusion criteria and EC<id> for exclusion criteria, where <id> is a natural number.
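As an illustration of how identified criteria allow a rejection criterion to be attached to each record, the following Python sketch represents each exclusion criterion as a predicate keyed by its EC<id> code. The three criteria, the field names of the record, and the years of the time frame are hypothetical; an actual protocol would define its own.

```python
from typing import Any, Callable, Dict, Optional

Record = Dict[str, Any]  # assumed shape: bibliographic metadata of one primary source

# Hypothetical exclusion criteria; each predicate returns True when the record is rejected.
EXCLUSION_CRITERIA: Dict[str, Callable[[Record], bool]] = {
    "EC1": lambda r: r["language"] != "en",          # not written in English
    "EC2": lambda r: not (2015 <= r["year"] <= 2022),  # outside the assumed time frame
    "EC3": lambda r: not r["peer_reviewed"],         # not peer reviewed
}


def rejection_criterion(record: Record) -> Optional[str]:
    """Return the identifier of the first exclusion criterion met, or None if none applies."""
    for criterion_id, is_excluded in EXCLUSION_CRITERIA.items():
        if is_excluded(record):
            return criterion_id
    return None


old_paper = {"language": "en", "year": 2010, "peer_reviewed": True}
print(rejection_criterion(old_paper))  # EC2
```

Stopping at the first criterion met mirrors the practice of discarding a record as soon as one exclusion criterion applies, while still leaving a documented reason for every rejection.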

Quality criteria are used when screening primary sources that meet the inclusion criteria but may have weaknesses or shortcomings, or contribute less to the research questions. The aim is to identify such gaps to decide whether each paper's contribution is of interest for the systematic review. A checklist is designed to check the relevant aspects of the selected articles. This checklist consists of a series of criteria that are evaluated and scored for each selected primary source according to a defined metric (Likert from 1 to N points; binary; tri-valued, i.e., Yes/No/Partial; etc.). Depending on the total score (the sum of the scores of the items in the list), each primary source is included or not in the final corpus of the review, for which a cut-off point on the total possible points of the rubric is defined. For example, Cruz-Benito et al. (2019) propose a list of 10 items (Table 4) and a tri-valued metric: 1 if the criterion is met, 0 if it is not met, and 0.5 if it is partially met. Thus, a primary source can score between 0 and 10, and only primary sources in the top quarter of that range, i.e., with 7.5 points or more, are selected.

Table 4. Quality assessment checklist. Source: (Cruz-Benito et al., 2019).

| Question | Score |
| --- | --- |
| 1. Are the research aims related to software architectures & HCI/HMI clearly specified? | Y/N/Partial |
| 2. Was the study designed to achieve these aims? | Y/N/Partial |
| 3. Are data presented on the evaluation of the proposed solution? | Y/N/Partial |
| 4. Are data presented on the assessment regarding the human part of HCI/HMI? | Y/N/Partial |
| 5. Is the software architecture clearly described and is its design justified? | Y/N/Partial |
| 6. Are the devices involved clearly specified? Are their functions within the software architecture justified? | Y/N/Partial |
| 7. Do the researchers discuss any problem with the software architecture described? | Y/N/Partial |
| 8. Is the solution based on a software architecture tested in a real context? | Y/N/Partial |
| 9. Are the links between data, interpretation and conclusions made clear? | Y/N/Partial |
| 10. Are all research questions answered adequately? | Y/N/Partial |
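The tri-valued metric and the 7.5-point threshold described for this checklist can be sketched in a few lines of Python. The function names and the check that exactly ten answers are supplied are assumptions for illustration; only the scores (1, 0.5, 0) and the cut-off come from the example of Cruz-Benito et al. (2019).

```python
from typing import Sequence

SCORES = {"Y": 1.0, "Partial": 0.5, "N": 0.0}  # tri-valued metric
THRESHOLD = 7.5                                # cut-off on a 0-10 scale
N_ITEMS = 10                                   # items in the checklist


def quality_score(answers: Sequence[str]) -> float:
    """Sum the score of each checklist answer."""
    return sum(SCORES[a] for a in answers)


def passes_quality(answers: Sequence[str], threshold: float = THRESHOLD) -> bool:
    """True when the source reaches the cut-off and stays in the review corpus."""
    if len(answers) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} answers, got {len(answers)}")
    return quality_score(answers) >= threshold


answers = ["Y"] * 6 + ["Partial"] * 3 + ["N"]  # 6 + 1.5 + 0 = 7.5 points
print(quality_score(answers), passes_quality(answers))  # 7.5 True
```

A source with seven criteria met and three not met would score 7.0 and be excluded, which shows how close to the threshold individual judgements can matter.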

The data sources in which the primary sources of the systematic review are to be sought must be selected and justified. The suitability of each data source needs to be assessed with respect to the discipline and to whether online access to it is available. The selection should not be limited to the most extensive databases, but researchers should keep in mind the objectives sought and the effort to be made. For example, conducting a systematic review for a doctoral thesis is not the same as conducting one to learn the current state of a line of research in recent years. An important decision is whether to include grey literature sources (Ferreras-Fernández et al., 2015). According to the AMSTAR guidelines (Shea et al., 2017), at least two data sources should be searched, and as the number of data sources searched increases, the yield is higher and the results are more accurate and complete. Some examples of frequently used data sources are listed in Table 5.

Table 5. Databases. Source: Own elaboration.

| Field | Database | Coverage |
| --- | --- | --- |
| Health | MEDLINE/PubMed | General medical and biomedical sciences. Includes medicine, dentistry, nursing, allied health |
| | PsycInfo | Psychology and related fields |
| Social Sciences | Social Science Citation Index | Social sciences |
| | ASSIA | Social sciences, includes sociology, psychology and some anthropology, economics, medicine, law, and politics |
| Social Care | Social care online | Social work and community care |
| | Social services abstracts | Social work, human services, and related areas |
| Education | British Education Index | Education (Europe) |
| | Education Resources Information Centre (ERIC) | Education (US emphasis) |
| Information Studies | Library, Information Science Abstracts (LISA) | Library and information sciences |
| | Library, Information Science and Technology Abstracts (LISTA) | Library and information sciences |
| Computer Sciences | Computer and information systems abstracts | Broad coverage of computer sciences |
| | ACM | Broad coverage of computer sciences |
| | IEEEXplore | Electrical engineering, computer science and related technologies |
| Business and Management | Business Source Premier | Business research including marketing, management, accounting, finance, and economics |
| Multidisciplinary | Web of Science | Multidisciplinary |
| | Scopus | Multidisciplinary |
| | Google Scholar | Multidisciplinary |
| | Dialnet | Multidisciplinary (mainly centered on social sciences and humanities in Spanish) |
| | Springer | Multidisciplinary |
| | ScienceDirect | Multidisciplinary |
| | Emerald Insight | Multidisciplinary |

Before formulating a search equation, the search terms must be clearly and precisely established, and then the logical relationships between them must be defined. For their selection, the scope of the systematic review, i.e., the PICOC analysis, must be considered. The terms should be organised according to the search strategy that has been decided upon, and it may be necessary to define synonyms. If different languages are supported, the equivalences between terms in the languages considered must be established. Wildcard characters are often used to define families of terms with the same root and different endings. Sometimes terms are not easy to select, and other types of analysis must be used to determine them (Marcos-Pablos & García-Peñalvo, 2020).

Once the terms for each concept within the search strategy have been identified, one or more canonical search equations using Boolean logic (using the logical operators AND, OR and NOT) should be devised to combine the terms appropriately. These canonical equations will be adapted when reviewing each of the selected data sources. Hart (2002) explains Boolean logic as a way of “adding, subtracting and multiplying search terms to expand (add), reduce (subtract) or include (multiply or combine) terms in the search”.

An efficient search equation would consist of descriptors and their corresponding qualifiers combined using the most appropriate Boolean operators:

•The OR operator (union operator) shall be used to link related concepts.

•To link terms that refer to different concepts in the same document, AND (intersection operator) shall be used.

•NOT (exclusion operator) is used to eliminate documents containing the unwanted term.

•When formulating more complex search equations, in which several operators are combined, brackets shall be used to indicate which operation should be performed first and proximity operators if these are supported by the query language of the data source.
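The rules above can be illustrated with a small Python sketch that builds a canonical equation from groups of terms: synonyms within a concept are joined with OR, the concepts are joined with AND, each group is bracketed, and unwanted terms are appended with NOT. The function names, the `AND NOT` spelling, and the sample terms are assumptions for illustration; the exact operator syntax must be checked against each data source.

```python
from typing import List, Sequence


def quote(term: str) -> str:
    """Quote multi-word terms so that they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def or_group(terms: Sequence[str]) -> str:
    """Join related terms (synonyms) with OR inside brackets."""
    return "(" + " OR ".join(quote(t) for t in terms) + ")"


def canonical_equation(concepts: List[Sequence[str]],
                       excluded: Sequence[str] = ()) -> str:
    """Join the concept groups with AND and append the excluded terms with NOT."""
    equation = " AND ".join(or_group(c) for c in concepts)
    if excluded:
        equation += " AND NOT " + or_group(excluded)
    return equation


# Hypothetical terms; '*' is a wildcard covering different endings of the same root.
equation = canonical_equation(
    concepts=[["dashboard*", "information visualization"], ["tailor*", "customiz*"]],
    excluded=["automotive"],
)
print(equation)
# (dashboard* OR "information visualization") AND (tailor* OR customiz*) AND NOT (automotive)
```

Bracketing every group makes the order of evaluation explicit, so the equation does not depend on the operator precedence of a particular query language.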

3.1.4. Evaluating the review protocol

The protocol is a critical element of any systematic review. The researchers should agree on a procedure to evaluate the protocol. A group of independent experts could be asked to review the protocol if possible. Subsequently, the same experts could be asked to review the final report.

3.2. Conducting the review

Once the protocol is defined, the actual review work can begin with the stages of identification, selection, and inclusion of primary sources. This phase should be documented and represented visually through a flowchart. The PRISMA flowchart is the most recommended, but there are other options, such as the one used in the study by Dias et al. (2018).

Furthermore, for the principle of transparency and to support traceability, all datasets handled (from the initial to the final one, passing through the different filtering applications) should be accessible to any researcher in cloud-accessible files.

3.2.1. Identification of research

In this stage, the search strategy planned in the review protocol is implemented. To do so, a search is launched in each of the selected databases, adapting the canonical equation to each interface or query language belonging to each specific database or data source.

Attempts should be made to ensure that the queries in the different data sources are equivalent; otherwise, the results obtained may not be comparable. Each adaptation of the canonical equation for each data source should be documented, considering that, if a search interface is used, many systems translate the search into a textual equation, which should be documented in the process documentation.
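One way to keep the adaptations equivalent and documented is to generate them from the canonical equation and store each resulting query with its data source and date. The Python sketch below assumes the field-tag wrappers `TITLE-ABS-KEY(...)` for Scopus and `TS=(...)` for Web of Science; these should be verified against each database's current search help, and the function name `adapt` and the log structure are hypothetical.

```python
from typing import Dict, List

# Assumed field-tag wrappers restricting the search to title, abstract and keywords/topic.
ADAPTERS: Dict[str, str] = {
    "Scopus": "TITLE-ABS-KEY({eq})",
    "Web of Science": "TS=({eq})",
}


def adapt(canonical: str, run_date: str) -> List[Dict[str, str]]:
    """Wrap the canonical equation for each data source and return a documentation log."""
    return [
        {"source": source, "query": template.format(eq=canonical), "date": run_date}
        for source, template in ADAPTERS.items()
    ]


log = adapt("(a OR b) AND c", run_date="2022-01-01")
for entry in log:
    print(entry["source"], "->", entry["query"])
```

Because every entry is derived from the same canonical string, any difference between data sources is confined to the documented wrapper.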

The records selected from each search should be exported and integrated into the management tool used to handle the positive results (Spreadsheet, Parsif.al, Mendeley, etc.).

Once the results of all searches are integrated, to end this identification stage (which corresponds to the first part of the PRISMA 2020 flowchart), the records that will not proceed to the selection stage are eliminated, typically records that are duplicates because they have been identified in more than one database. However, there may be other reasons, e.g., errors in the export of metadata, etc. Each group of records deleted for a specific reason should be recorded at this identification stage.
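A minimal Python sketch of this clean-up step is shown below: records are compared by DOI when available, otherwise by a normalised title, and the number of records removed is tallied per reason so that it can be reported in the flowchart. The record fields, the two removal reasons, and the sample records (including the made-up DOI) are assumptions for illustration.

```python
import re
from collections import Counter
from typing import Any, Dict, List, Tuple

Record = Dict[str, Any]


def normalise_title(title: str) -> str:
    """Lower-case the title and collapse punctuation and spacing."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()


def record_key(record: Record) -> Tuple[str, str]:
    """Prefer the DOI as identity; fall back to the normalised title."""
    doi = (record.get("doi") or "").strip().lower()
    return ("doi", doi) if doi else ("title", normalise_title(record["title"]))


def identify(records: List[Record]) -> Tuple[List[Record], Counter]:
    """Return the records that proceed to screening and the removals per reason."""
    seen, kept, removed = set(), [], Counter()
    for record in records:
        if not record.get("title"):
            removed["incomplete metadata"] += 1  # e.g., an export error
            continue
        key = record_key(record)
        if key in seen:
            removed["duplicate"] += 1
            continue
        seen.add(key)
        kept.append(record)
    return kept, removed


records = [
    {"title": "A Study of X", "doi": "10.1000/abc", "source": "Scopus"},
    {"title": "A study of X.", "doi": "10.1000/ABC", "source": "Web of Science"},
    {"title": "", "doi": "", "source": "Scopus"},
    {"title": "Another paper", "doi": None, "source": "Scopus"},
]
kept, removed = identify(records)
print(len(kept), dict(removed))  # 2 {'duplicate': 1, 'incomplete metadata': 1}
```

A limitation of this sketch is that a record exported with a DOI and the same record exported without one are not matched, so a manual check of near-duplicates is still advisable.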

3.2.2. Selection of primary studies

This corresponds to the second stage (screening) of the PRISMA 2020 flowchart. The filtering is done iteratively in different phases. The aim is to remove, in the shortest possible time, as many primary sources as possible that do not contribute to the research questions. As the number of records is reduced and the likelihood of them becoming candidates for the final review corpus increases, the time spent reviewing each one increases.

In a first iteration, the titles and abstracts of each primary source should be reviewed, applying the previously defined inclusion and exclusion criteria. As soon as one of the exclusion criteria is met, the record is discarded. If a mixed approach involving humans and automated tools is used at this stage, the number of records excluded by humans should be reported separately from the number excluded by automated processes.
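The bookkeeping for this first filter can be sketched as follows in Python: each screening decision stores the exclusion criterion that was met (if any) and whether a human or an automated tool took the decision, so that both tallies can be reported. The `Decision` structure, its field names, and the sample decisions are hypothetical.

```python
from collections import Counter
from typing import Dict, List, NamedTuple, Optional


class Decision(NamedTuple):
    record_id: str
    criterion: Optional[str]  # EC<id> that caused the exclusion, or None if retained
    decided_by: str           # "human" or "automation"


def screening_summary(decisions: List[Decision]) -> Dict[str, object]:
    """Tally exclusions by decision maker and by criterion."""
    excluded = [d for d in decisions if d.criterion is not None]
    return {
        "screened": len(decisions),
        "excluded_by": dict(Counter(d.decided_by for d in excluded)),
        "excluded_per_criterion": dict(Counter(d.criterion for d in excluded)),
        "retained": len(decisions) - len(excluded),
    }


decisions = [
    Decision("S01", None, "human"),
    Decision("S02", "EC1", "automation"),
    Decision("S03", "EC2", "human"),
    Decision("S04", "EC1", "human"),
]
summary = screening_summary(decisions)
print(summary["excluded_by"])  # {'automation': 1, 'human': 2}
```

The same summary can be produced after each later filter, which gives the figures needed for every box of the flowchart.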

After this first filter, one should start working with the full texts of the primary sources, so a second filter would eliminate those records for which the full text is not available.

When the full text is available, it should be read in depth. If it again passes the inclusion and exclusion criteria, it remains a candidate for inclusion in the final corpus. This requires a thorough reading, although it can be preceded by a quick first pass that grasps the content of the document without going into its details (reviewing the structure, introduction, conclusions, figures, tables, and references) (Keshav, 2007).

If, during the in-depth review of each paper, new primary sources are detected in its references that are candidates for inclusion, they could be selected for inspection. They will be part of the review corpus if they pass all the inclusion and exclusion criteria, even if the search strategy did not initially select them. In PRISMA 2020, there is an optional part in its flowchart to document this process (grey boxes on the right-hand side of the diagram presented in Figure 2).

When all primary sources have been thoroughly reviewed, those that have passed the inclusion and exclusion criteria form the set of records that are candidates for the corpus of the review. If the protocol does not include quality criteria, this is the set of eligible records (third phase of the PRISMA flowchart) resulting from the search strategy, which should be combined with the existing records if an incremental approach based on previous review work is applied (grey boxes on the left side of the flowchart presented in Figure 2). If the protocol does include quality criteria, the next step applies a new filter to this set of primary sources.

3.2.3. Study quality assessment

If the review protocol requires it, the quality criteria are applied to each primary source in the candidate set. The researchers in charge of the systematic review set the cut-off score that primary sources must reach to be selected for the review corpus. As in every screening stage, it should be recorded which primary sources have not reached the established minimum and have therefore been excluded from the review corpus (Phelps & Campbell, 2012).

As discussed in the selection stage (when no quality criteria are applied), the review corpus is composed of these primary sources selected from the search strategy applied, combined with existing records, if an incremental approach is applied based on previous review works.

3.2.4. Data extraction and monitoring

From each primary source in the review corpus, the data relevant to answering the research questions should be extracted. Data extraction can be done at the same time as quality assessment or separately, before or after it (Barnett-Page & Thomas, 2009). A distinction is made between, on the one hand, the metadata of the article and the file or URL where the full text can be found (for the documentary management of primary sources, a bibliographic reference manager is recommended) and, on the other, the data and/or contents of the primary source related to the research questions.

At this data extraction stage, the elements to be collected vary for each specific review and need to be guided by the research questions and objectives. If the data to be extracted are quantitative, reviewers should examine what data elements are present in each study.

It is good practice to prepare a quantitative data extraction form (which can be adapted to qualitative data), which could contain the following elements (Booth et al., 2016):

•Eligibility: an explicit statement of inclusion and exclusion criteria with the opportunity to indicate whether a study should be included in the review or not.

•Descriptive data: information on the characteristics of the study, including the setting and population.

•Quality assessment data: information on the quality of the study. Documentation may include a formal checklist.

•Results: information on the study results in the form of data to be used in the review. The data may be in a “raw” format taken directly from the document and/or in a uniform format. Ideally, they should be recorded in both forms so that variation in methods is visible and their accuracy can be checked.
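The four groups of elements listed above could be captured in a simple structure such as the Python sketch below, which keeps the results both as reported and in a uniform form. The class name `ExtractionForm`, its field names, and the sample study are assumptions for illustration and are not prescribed by Booth et al. (2016).

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, Optional


@dataclass
class ExtractionForm:
    """One row of a hypothetical data extraction form."""
    record_id: str
    eligible: bool                                              # eligibility decision
    eligibility_note: str = ""                                  # criterion applied, if any
    descriptive: Dict[str, str] = field(default_factory=dict)   # setting, population, ...
    quality_score: Optional[float] = None                       # from the quality checklist
    results_raw: Dict[str, str] = field(default_factory=dict)   # as stated in the document
    results_uniform: Dict[str, float] = field(default_factory=dict)  # converted values


form = ExtractionForm(
    record_id="S01",
    eligible=True,
    descriptive={"setting": "university", "population": "first-year students"},
    quality_score=8.0,
    results_raw={"completion": "45 of 60 students"},
    results_uniform={"completion_rate": 45 / 60},
)
row = asdict(form)  # plain dictionary, ready to be written to a spreadsheet or CSV file
print(row["results_uniform"]["completion_rate"])  # 0.75
```

Storing the raw statement next to the converted value lets a second reviewer check the conversion without returning to the full text.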

3.2.5. Data synthesis

This stage borders on the report-writing phase of the systematic review. In fact, it could be carried out in conjunction with report creation, but logically it still belongs to the review conduct phase.

There are various options for conducting the synthesis, where the approach is derived from the nature of the review and its objectives, e.g., categorisation, narrative synthesis, tabular presentation, selection of key terms, data extraction, quality assessment, etc.

3.3. Reporting the review

It is the final phase of the systematic review. The aim is to document and evaluate the results of the systematic review.

3.3.1. Formatting the main report

The writing of the systematic review report should include the description and presentation of the methods followed, besides the results obtained from the selected primary sources.

This phase may consist of two steps (del Amo et al., 2018). First, a document is produced that includes all the information in detail. Second, one or more academic articles may be written that publicly present the systematic review work carried out. Therefore, the structure and scope of the report will depend on the type of document in which the results are to be presented.

Graphs, tables, and visual explanations should be used, but there must always be a section discussing the results and highlighting the contributions of the systematic review. It is good practice to include a section presenting the limitations of the systematic review study conducted.

Graphics can make a crucial contribution to synthesis because they help to identify patterns. They have a unique role in helping to visualise the relationship of the parts to the whole. They can also be used to establish links between different review features, for example, to represent a relationship between study characteristics and results. Creativity and critical analysis of data and its visualisation are crucial elements for data comparison and the identification of patterns and important and precise themes (Whittemore & Knafl, 2005). This category includes graphical representation of data, concept maps, logic models, maps, etc.

As for tables, they are a suitable tool to complete the narrative synthesis. Since tables are used to describe studies, not to analyse them, they are helpful for all types of studies. Tables can be used to describe the characteristics of the population and the intervention, make comparisons and present results, etc.

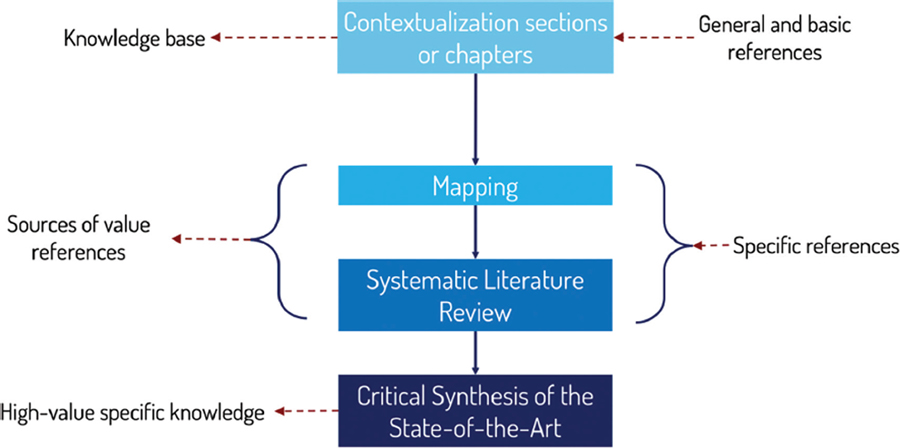

Suppose the systematic review is part of academic work, e.g., a PhD thesis. In that case, it can include a contextualisation section of the state-of-the-art based on the general references of the disciplinary field, a mapping section, a systematic review section and a discussion section of the results obtained as answers to the research questions as presented in Figure 3.

Figure 3. Structure of the state-of-the-art in a doctoral dissertation. Source: Own elaboration

When the report takes the form of a scientific article, it is usually difficult to incorporate the complete systematic review for reasons of length (unless the review is very specific and small), and it will be necessary to select those parts that are most appropriate for the objective of the article.

3.3.2. Evaluating the report

The report that compiles all the work done in the systematic review must be evaluated internally and, if possible, externally by experts. Systematic review articles will also be subject to peer review prior to acceptance for publication.

4. Conclusions

Advancing knowledge implies knowing what has been previously achieved. Without adequate coverage of the disciplinary field in which one is working, the risks of failure increase.

Therefore, reviewing the state-of-the-art is a core activity for both new and consolidated researchers. The management of the time and resources needed to cover the state-of-the-art well has evolved. When reference sources were in traditional libraries or researchers’ offices, coverage was opportunity-based. With mass access to primary sources in digital libraries, the problem is no longer access but information overload, which requires systematic methods to discern between works that really contribute to the state-of-the-art and those that simply introduce noise.

The systematic literature review approach has become the most powerful and accepted method to address the development of the much-needed state-of-the-art, applying the foundations of evidence-based research. For example, an academic work, such as a PhD thesis, is strengthened in its contextualisation when it has a systematic literature review. In this way, the formulation of its hypotheses and contributions will be much more robust, and its results are more likely to be shared in the form of an academic article.

However, one must also be aware of the limitations and risks of systematic reviews. On the one hand, the need to carry out a systematic review must be carefully assessed and, depending on the objectives pursued, the type of review must be chosen very carefully, because a study of these characteristics is very demanding in terms of time. On the other hand, it is necessary to consider the possible bias that the selected primary sources may introduce, either because the search strategy has not been adequate for the objectives pursued or because the primary sources to which the researchers have access may not be the most appropriate, mainly because of the lack of access to their full text. This is not a minor issue, as it can lead to significant differences between researchers from different institutions when these institutions do not offer the same access to academic resources and the resources are not open access (García-Peñalvo, 2017; Miedema, 2022).

The reliability of the systematic review process is based on the principle of transparency. This principle rests on defining and sharing the review protocol, as well as on public access to the datasets that have been generated, together with the decisions taken to filter and transform each dataset into the next, more refined one on the way to the final corpus of the review. This approach provides assurances about the review process and the conclusions reached, and it facilitates the reuse of these results for further development or new review work.

The evolution of a systematic review is much better covered in the PRISMA 2020 flowchart, which emphasises the importance of following a framework for developing and documenting the systematic review protocol that ensures the FAIR data model, meeting the principles of findability, accessibility, interoperability, and reuse.

References

Archer, N., Fevrier-Thomas, U., Lokker, C., McKibbon, K. A., & Straus, S. E. (2011). Personal health records: a scoping review. Journal of the American Medical Informatics Association, 18(4), 515-522. https://doi.org/10.1136/amiajnl-2011-000105

Balijepally, V., Mangalaraj, G., & Iyengar, K. (2011). Are we wielding this hammer correctly? A reflective review of the application of cluster analysis in information systems research. Journal of the Association for Information Systems, 12(5). https://doi.org/10.17705/1jais.00266

Barnett-Page, E., & Thomas, J. (2009). Methods for the synthesis of qualitative research: a critical review. BMC Medical Research Methodology, 9(1), Article 59. https://doi.org/10.1186/1471-2288-9-59

Blackman, K. C., Zoellner, J., Berrey, L. M., Alexander, R., Fanning, J., Hill, J. L., & Estabrooks, P. A. (2013). Assessing the internal and external validity of mobile health physical activity promotion interventions: a systematic literature review using the RE-AIM framework. Journal of Medical Internet Research, 15(10), Article e224. https://doi.org/10.2196/jmir.2745

Boers, M. (2018). Graphics and statistics for cardiology: Designing effective tables for presentation and publication. Heart, 104(3), 192–200. https://doi.org/10.1136/heartjnl-2017-311581

Bolinger, M. T., Josefy, M. A., Stevenson, R., & Hitt, M. A. (2021). Experiments in Strategy Research: A Critical Review and Future Research Opportunities. Journal of Management, 48(1), 77-113. https://doi.org/10.1177/01492063211044416

Boote, D., & Beile, P. (2005). Scholars before Researchers: On the Centrality of the Dissertation Literature Review in Research Preparation. Educational Researcher, 34(6), 3-15. https://doi.org/10.3102/0013189X034006003

Booth, A. (2006). Clear and present questions: formulating questions for evidence based practice. Library Hi Tech, 24(3), 355-368. https://doi.org/10.1108/07378830610692127

Booth, A., Sutton, A., & Papaioannou, D. (2016). Systematic Approaches to a Successful Literature Review (2nd ed.). Sage.

Boulos, M. N. K., Hetherington, L., & Wheeler, S. (2007). Second Life: an overview of the potential of 3-D virtual worlds in medical and health education. Health Information & Libraries Journal, 24(4), 233-245. https://doi.org/10.1111/j.1471-1842.2007.00733.x

Bryant, S. L., & Gray, A. (2006). Demonstrating the positive impact of information support on patient care in primary care: a rapid literature review. Health Information & Libraries Journal, 23(2), 118-125. https://doi.org/10.1111/j.1471-1842.2006.00652.x

Buck, H. G., Harkness, K., Wion, R., Carroll, S. L., Cosman, T., Kaasalainen, S., Kryworuchko, J., McGillion, M., O’Keefe-McCarthy, S., Sherifali, D., Strachan, P. H., & Arthur, H. M. (2015). Caregivers’ contributions to heart failure self-care: a systematic review. European Journal of Cardiovascular Nursing, 14(1), 79-89. https://doi.org/10.1177/1474515113518434

Butler, G., Deaton, S., Hodgkinson, J., Holmes, E., & Marshall, S. (2005). Quick but Not Dirty: Rapid Evidence Assessments as a Decision Support Tool in Social Policy. Government Social Research Unit.

Candy, B., King, M., Jones, L., & Oliver, S. (2011). Using qualitative synthesis to explore heterogeneity of complex interventions. BMC Medical Research Methodology, 11(1), Article 124. https://doi.org/10.1186/1471-2288-11-124

Cardwell, K., O’Neill, S. M., Tyner, B., Broderick, N., O’Brien, K., Smith, S. M., Harrington, P., Ryan, M., & O’Neill, M. (2022). A rapid review of measures to support people in isolation or quarantine during the Covid-19 pandemic and the effectiveness of such measures. Reviews in Medical Virology, 32(1), Article e2244. https://doi.org/10.1002/rmv.2244

Codina, L. (2017). Revisiones bibliográficas y cómo llevarlas a cabo con garantías: systematic reviews y SALSA Framework. Retrieved April 20th, 2017 from https://goo.gl/CG6vL5

Conde, M. Á., Rodríguez-Sedano, F. J., Fernández-Llamas, C., Gonçalves, J., Lima, J., & García-Peñalvo, F. J. (2021). Fostering STEAM through Challenge Based Learning, Robotics and Physical Devices: A systematic mapping literature review. Computer Applications in Engineering Education, 29, 46-65. https://doi.org/10.1002/cae.22354

Cooke, A., Smith, D., & Booth, A. (2012). Beyond PICO: the SPIDER tool for qualitative evidence synthesis. Qualitative Health Research, 22(10), 1435-1443. https://doi.org/10.1177/1049732312452938

Cruz-Benito, J., García-Peñalvo, F. J., & Therón, R. (2019). Analyzing the software architectures supporting HCI/HMI processes through a systematic review of the literature. Telematics and Informatics, 38, 118-132. https://doi.org/10.1016/j.tele.2018.09.006

Daigneault, P. M., Jacob, S., & Ouimet, M. (2014). Using systematic review methods within a Ph.D. dissertation in political science: challenges and lessons learned from practice. International Journal of Social Research Methodology, 17(3), 267-283. https://doi.org/10.1080/13645579.2012.730704

Das, S., Srivastava, S., Tripathi, A., & Das, S. (2022). Meta-analysis of EMF-induced pollution by COVID-19 in virtual teaching and learning with an artificial intelligence perspective. International Journal of Web-Based Learning and Teaching Technologies, 17(4), Article 66. https://doi.org/10.4018/IJWLTT.285566

Daudt, H. M. L., van Mossel, C., & Scott, S. J. (2013). Enhancing the scoping study methodology: a large, inter-professional team’s experience with Arksey and O’Malley’s framework. BMC Medical Research Methodology, 13(1), Article 48. https://doi.org/10.1186/1471-2288-13-48

del Amo, I. F., Erkoyuncu, J. A., Roy, R., Palmarini, R., & Onoufriou, D. (2018). A systematic review of Augmented Reality content-related techniques for knowledge transfer in maintenance applications. Computers in Industry, 103, 47-71. https://doi.org/10.1016/j.compind.2018.08.007

DeLone, W. H., & McLean, E. R. (1992). Information systems success: The quest for the dependent variable. Information Systems Research, 3(1), 60-95. https://doi.org/10.1287/isre.3.1.60

Denyer, D., & Tranfield, D. (2009). Producing a systematic review. In D. A. Buchanan & A. Bryman (Eds.), The Sage Handbook of Organizational Research Methods (pp. 671-689). Sage.

Dias, L. P. S., Barbosa, J. L. V., & Vianna, H. D. (2018). Gamification and serious games in depression care: A systematic mapping study. Telematics and Informatics, 35, 213-224. https://doi.org/10.1016/j.tele.2017.11.002

Dixon-Woods, M., Bonas, S., Booth, A., Jones, D. R., Miller, T., Sutton, A. J., Shaw, R. L., Smith, J. A., & Young, B. (2006). How can systematic reviews incorporate qualitative research? A critical perspective. Qualitative Research, 6(1), 27-44. https://doi.org/10.1177/1468794106058867

Ferreras-Fernández, T., García-Peñalvo, F. J., & Merlo-Vega, J. A. (2015). Open access repositories as channel of publication scientific grey literature. In G. R. Alves & M. C. Felgueiras (Eds.), Proceedings of the Third International Conference on Technological Ecosystems for Enhancing Multiculturality (TEEM’15) (Porto, Portugal, October 7-9, 2015) (pp. 419-426). ACM. https://doi.org/10.1145/2808580.2808643

Fink, A. (1998). Conducting literature research reviews: from paper to the Internet. Sage.

Fornons, V., & Palau, R. (2021). Flipped Classroom in the Teaching of Mathematics: A Systematic Review. Education in the Knowledge Society, 22, Article e24409. https://doi.org/10.14201/eks.24409

García-Holgado, A., & García-Peñalvo, F. J. (2018). Mapping the systematic literature studies about software ecosystems. In F. J. García-Peñalvo (Ed.), Proceedings TEEM’18. Sixth International Conference on Technological Ecosystems for Enhancing Multiculturality (Salamanca, Spain, October 24th-26th, 2018) (pp. 910-918). ACM. https://doi.org/10.1145/3284179.3284330

García-Holgado, A., Marcos-Pablos, S., & García-Peñalvo, F. J. (2020). Guidelines for performing Systematic Research Projects Reviews. International Journal of Interactive Multimedia and Artificial Intelligence, 6(2), 136-144. https://doi.org/10.9781/ijimai.2020.05.005

García-Peñalvo, F. J. (2017). Mitos y realidades del acceso abierto. Education in the Knowledge Society, 18(1), 7-20. https://doi.org/10.14201/eks2017181720

García-Peñalvo, F. J., & Seoane-Pardo, A. M. (2015). An updated review of the concept of eLearning. Tenth anniversary. Education in the Knowledge Society, 16(1), 119-144. https://doi.org/10.14201/eks2015161119144

Gastel, B., & Day, R. (2016). How to Write and Publish a Scientific Paper (8th ed.). Greenwood.

Genero, M., Cruz-Lemus, J. A., & Piattini, M. (2014). Métodos de Investigación en Ingeniería del Software. RA-MA.

Glasgow, R. E., Vogt, T. M., & Boles, S. M. (1999). Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health, 89(9), 1322-1327. https://doi.org/10.2105/AJPH.89.9.1322

Gough, D., Thomas, J., & Oliver, S. (2012). Clarifying differences between review designs and methods. Systematic Reviews, 1, Article 28. https://doi.org/10.1186/2046-4053-1-28

Grant, M. J., & Booth, A. (2009). A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information & Libraries Journal, 26(2), 91-108. https://doi.org/10.1111/j.1471-1842.2009.00848.x

Green, B. N., Johnson, C. D., & Adams, A. (2006). Writing narrative literature reviews for peer-reviewed journals: secrets of the trade. Journal of Chiropractic Medicine, 5(3), 101-117. https://doi.org/10.1016/S0899-3467(07)60142-6

Greenhalgh, T. (2019). How to Read a Paper: The Basics of Evidence-based Medicine and Healthcare (6th ed.). John Wiley & Sons Ltd.

Greenhalgh, T., Wong, G., Westhorp, G., & Pawson, R. (2011). Protocol-realist and meta-narrative evidence synthesis: evolving standards (RAMESES). BMC Medical Research Methodology, 11, Article 115. https://doi.org/10.1186/1471-2288-11-115

Guirao-Goris, J. A., Olmedo Salas, A., & Ferrer Ferrandis, E. (2008). El artículo de revisión. Revista Iberoamericana de Enfermería Comunitaria, 1(1).

Gyongyosi, L., & Imre, S. (2019). A Survey on quantum computing technology. Computer Science Review, 31, 51-71. https://doi.org/10.1016/j.cosrev.2018.11.002

Hart, C. (2002). Doing a Literature Search: A Comprehensive Guide for the Social Sciences. Sage.

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M., & Welch, V. (2021). Cochrane Handbook for Systematic Reviews of Interventions. Version 6.2. Cochrane Training. https://bit.ly/2RgWEgh

Hutton, B., Catalá-López, F., & Moher, D. (2016). The PRISMA statement extension for systematic reviews incorporating network meta-analysis: PRISMA-NMA. Medicina Clínica, 146(6), 262-266. https://doi.org/10.1016/j.medcle.2016.10.003

Ibrahim, R. (2008). Setting up a research question for determining the research methodology. ALAM CIPTA International Journal on Sustainable Tropical Design Research & Practice, 3(1), 99-102.

Keshav, S. (2007). How to read a paper. ACM SIGCOMM Computer Communication Review, 37(3), 83-84. https://doi.org/10.1145/1273445.1273458

King, A. J., Bol, N., Cummins, R. G., & John, K. K. (2019). Improving visual behavior research in communication science: An overview, review, and reporting recommendations for using eye-tracking methods. Communication Methods and Measures, 13(3), 149-177. https://doi.org/10.1080/19312458.2018.1558194

King, W. R., & He, J. (2005). Understanding the role and methods of meta-analysis in IS research. Communications of the Association for Information Systems, 16(1), 665-686. https://doi.org/10.17705/1CAIS.01632

Kitchenham, B., & Charters, S. (2007). Guidelines for performing Systematic Literature Reviews in Software Engineering. Version 2.3 (Technical Report EBSE-2007-01). https://goo.gl/L1VHcw

Letelier, L. M., Manríquez, J. J., & Rada, G. (2005). Revisiones sistemáticas y metaanálisis: ¿son la mejor evidencia? Revista Médica de Chile, 133(2), 246-249. https://doi.org/10.4067/S0034-98872005000200015

Liberati, A., Altman, D. G., Tetzlaff, J., Mulrow, C., Gøtzsche, P. C., Ioannidis, J. P. A., Clarke, M., Devereaux, P. J., Kleijnen, J., & Moher, D. (2009). The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Health Care Interventions: Explanation and Elaboration. PLOS Medicine, 6(7), Article e1000100. https://doi.org/10.1371/journal.pmed.1000100

Marcos-Pablos, S., & García-Peñalvo, F. J. (2020). Information retrieval methodology for aiding scientific database search. Soft Computing, 24(8), 5551-5560. https://doi.org/10.1007/s00500-018-3568-0

Marcos-Pablos, S., & García-Peñalvo, F. J. (2022). Emotional Intelligence in Robotics: A Scoping Review. In J. F. de Paz Santana, D. H. de la Iglesia, & A. J. López Rivero (Eds.), New Trends in Disruptive Technologies, Tech Ethics and Artificial Intelligence (pp. 66-75). Springer International Publishing. https://doi.org/10.1007/978-3-030-87687-6_7

Mayo-Wilson, E., Li, T., Fusco, N., Dickersin, K., & MUDS investigators. (2018). Practical guidance for using multiple data sources in systematic reviews and meta-analyses (with examples from the MUDS study). Research Synthesis Methods, 9(1), 2-12. https://doi.org/10.1002/jrsm.1277

McGhee, G., Marland, G. R., & Atkinson, J. (2007). Grounded theory research: literature reviewing and reflexivity. Journal of Advanced Nursing, 60(3), 334-342. https://doi.org/10.1111/j.1365-2648.2007.04436.x

Mengist, W., Soromessa, T., & Legese, G. (2020). Method for conducting systematic literature review and meta-analysis for environmental science research. MethodsX, 7, Article 100777. https://doi.org/10.1016/j.mex.2019.100777

Miedema, F. (2022). Open Science: the Very Idea. Springer. https://doi.org/10.1007/978-94-024-2115-6

Moher, D., Liberati, A., Tetzlaff, J., & Altman, D. G. (2010). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. International Journal of Surgery, 8, 336-341. https://doi.org/10.1016/j.ijsu.2010.02.007

Oxman, A. D., Cook, D. J., & Guyatt, G. H. (1994). Users’ guides to the medical literature. VI. How to use an overview. Evidence-Based Medicine Working Group. JAMA, 272(17), 1367-1371. https://doi.org/10.1001/jama.1994.03520170077040

Oxman, A. D., & Guyatt, G. H. (1988). Guidelines for reading literature reviews. Canadian Medical Association Journal, 138(8), 697-703.

Page, M. J., McKenzie, J. E., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., McGuinness, L. A., Stewart, L. A., Thomas, J., Tricco, A. C., Welch, V. A., Whiting, P., & Moher, D. (2021). The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ, 372, Article n71. https://doi.org/10.1136/bmj.n71

Page, M. J., Moher, D., Bossuyt, P. M., Boutron, I., Hoffmann, T. C., Mulrow, C. D., Shamseer, L., Tetzlaff, J. M., Akl, E. A., Brennan, S. E., Chou, R., Glanville, J., Grimshaw, J. M., Hróbjartsson, A., Lalu, M. M., Li, T., Loder, E. W., Mayo-Wilson, E., McDonald, S., McGuinness, L. A., Stewart, L. A., Thomas, J., Tricco, A. C., Welch, V. A., Whiting, P., & McKenzie, J. E. (2021). PRISMA 2020 explanation and elaboration: updated guidance and exemplars for reporting systematic reviews. BMJ, 372, Article n160. https://doi.org/10.1136/bmj.n160

Palvia, P., Leary, D., Mao, E., Midha, V., Pinjani, P., & Salam, A. F. (2004). Research methodologies in MIS: An update. Communications of the Association for Information Systems, 14, 526-542. https://doi.org/10.17705/1CAIS.01424

Paré, G., Trudel, M. C., Jaana, M., & Kitsiou, S. (2015). Synthesizing information systems knowledge: a typology of literature reviews. Information & Management, 52(2), 183-199. https://doi.org/10.1016/j.im.2014.08.008

Petticrew, M., & Roberts, H. (2005). Systematic reviews in the social sciences: A practical guide. John Wiley & Sons.

Phelps, S. F., & Campbell, N. (2012). Systematic Reviews in Theory and Practice for Library and Information Studies. Library and Information Research, 36(112), 6-15.